Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave (Part 1)

By a mysterious writer

Description

In The News — CoreWeave

The Story Behind CoreWeave's Rumored Rise to a $5-$8B Valuation, Up From $2B in April

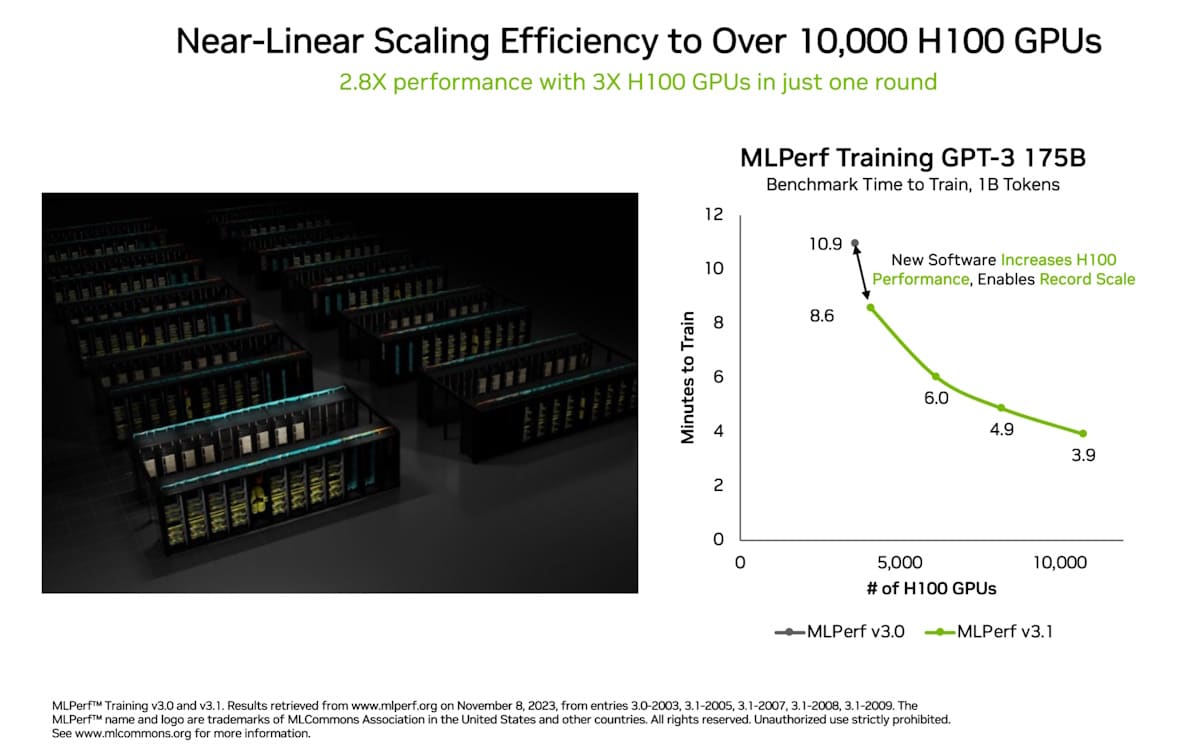

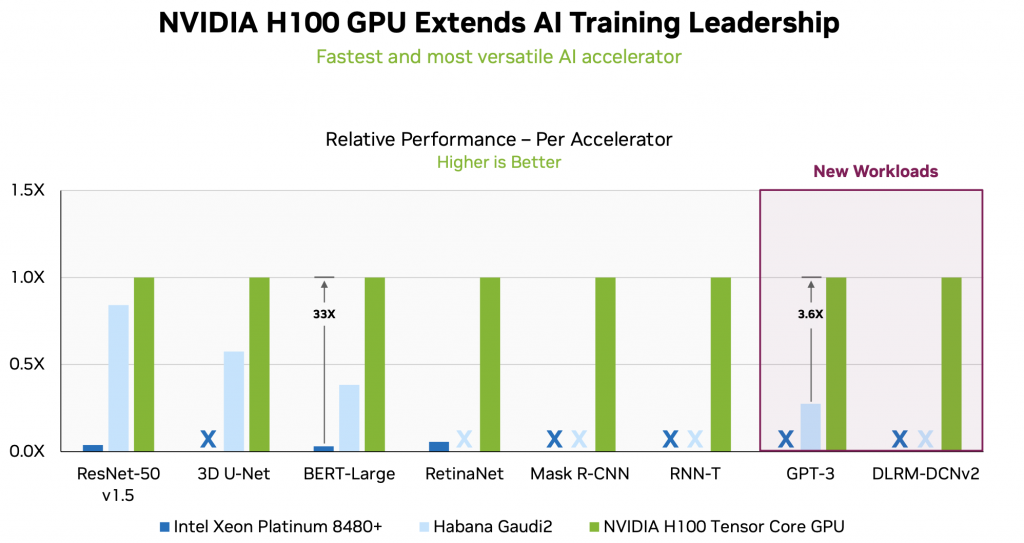

Acing the Test: NVIDIA Turbocharges Generative AI Training in MLPerf Benchmarks

NVIDIA H100 Dominates New MLPerf v3.0 Benchmark Results

AI Chips in 2024: Is Nvidia Poised to Lead The Race?

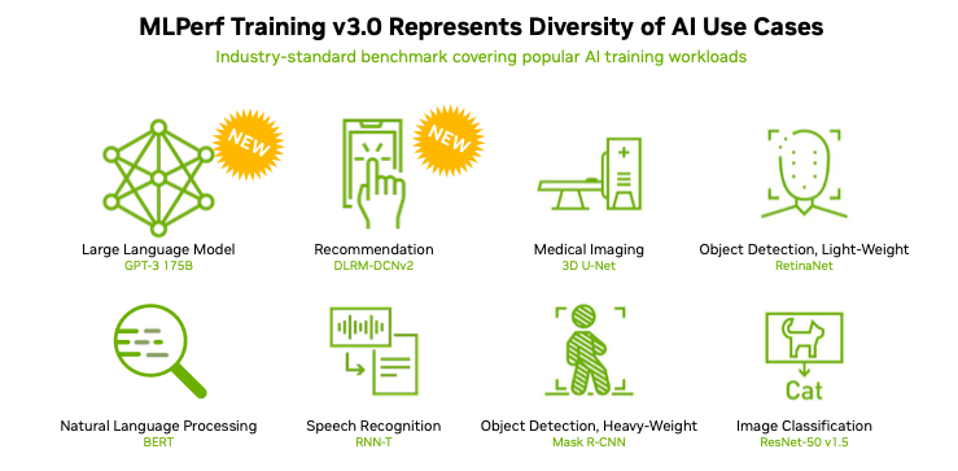

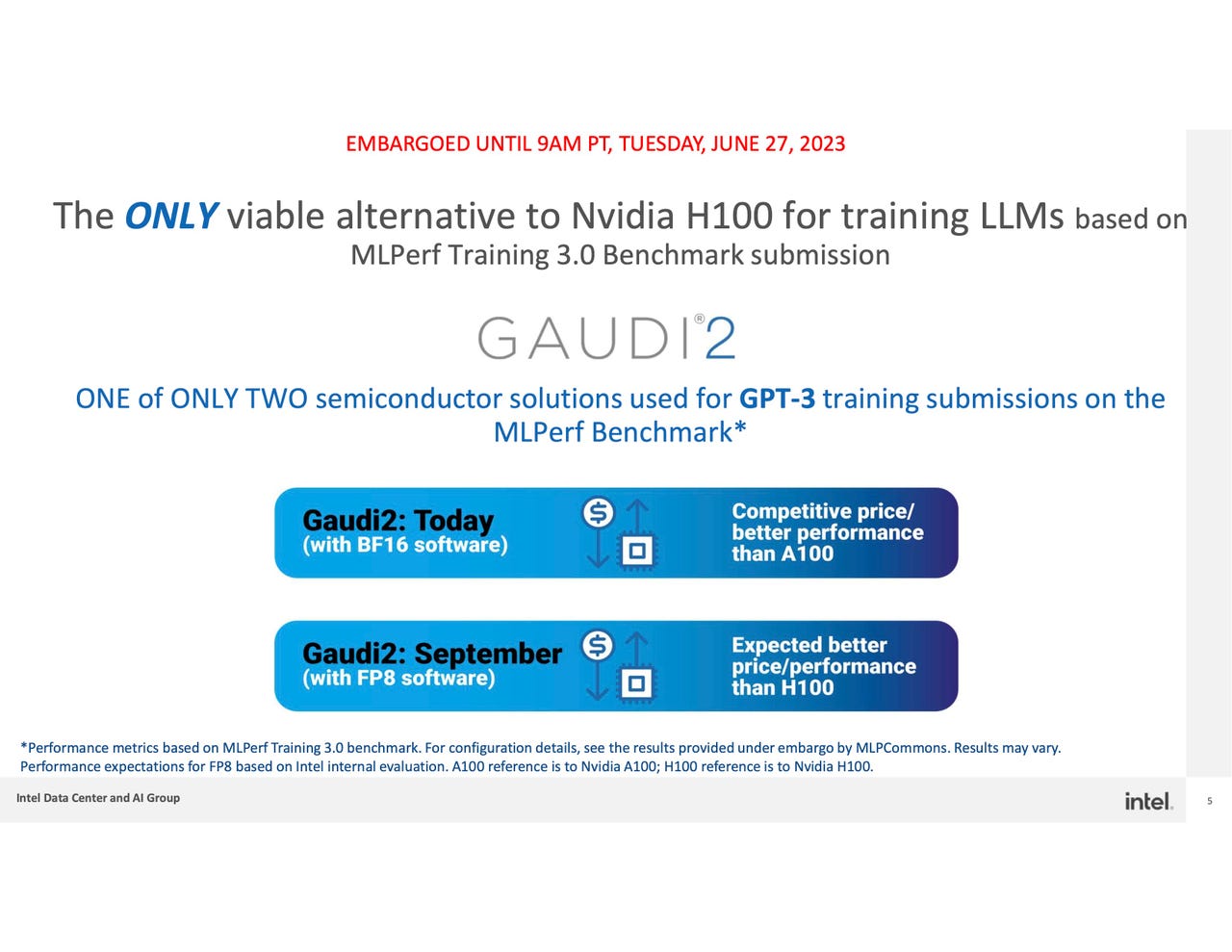

MLPerf Training 3.0 Showcases LLM; Nvidia Dominates, Intel/Habana Also Impress

Nvidia sweeps AI benchmarks, but Intel brings meaningful competition

OGAWA, Tadashi on X: Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave, Part 1. Apr 27, 2023. H100 vs A100: BF16: 3.2x; Bandwidth: 1.6x; GPT training BF16: 2.2x (

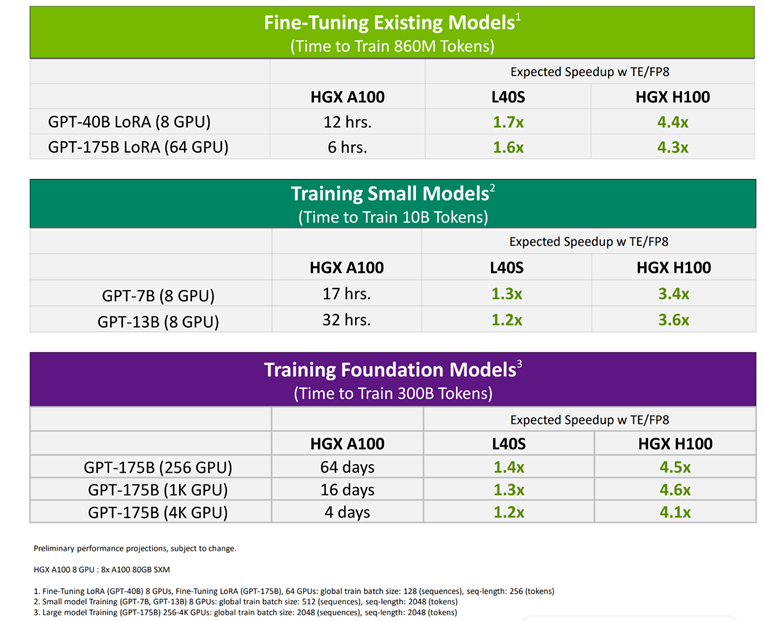

Choosing the Right GPU for LLM Inference and Training

Deploying a 1.3B GPT-3 Model with NVIDIA NeMo Framework

NVIDIA H100 GPUs Dominate MLPerf's Generative AI Benchmark

Achieving Top Inference Performance with the NVIDIA H100 Tensor Core GPU and NVIDIA TensorRT-LLM
