(PDF) Incorporating representation learning and multihead attention

By a mysterious writer

Description

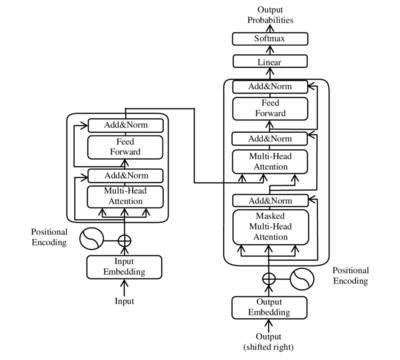

Incorporating representation learning and multihead attention to improve biomedical cross-sentence n-ary relation extraction, BMC Bioinformatics

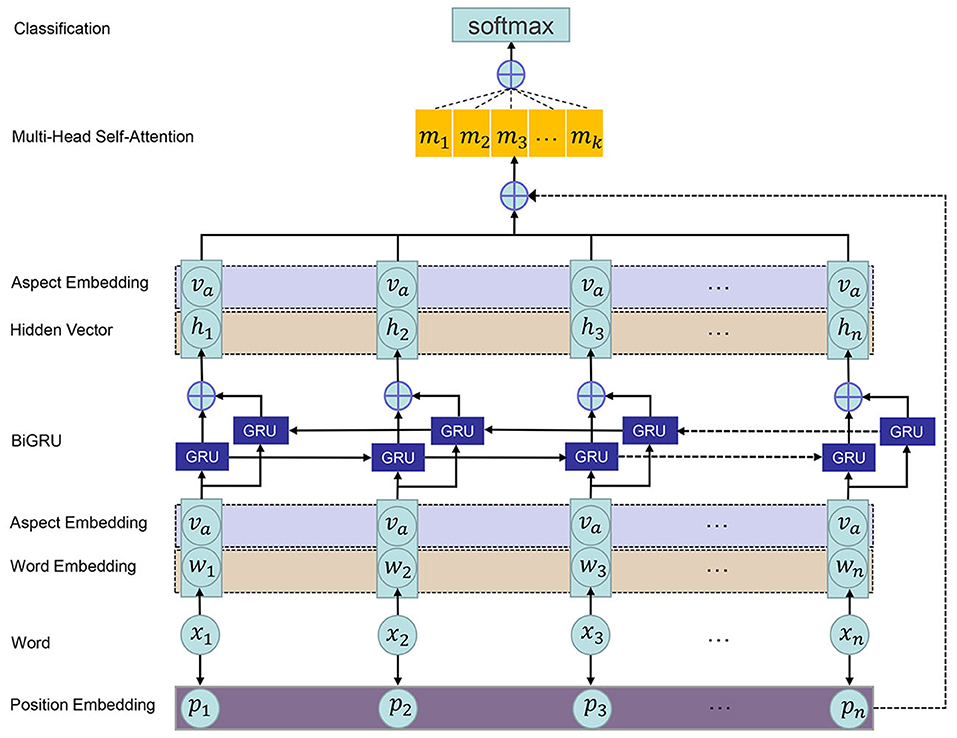

Frontiers | Position-Enhanced Multi-Head Self-Attention Based Bidirectional Gated Recurrent Unit for Aspect-Level Sentiment Classification

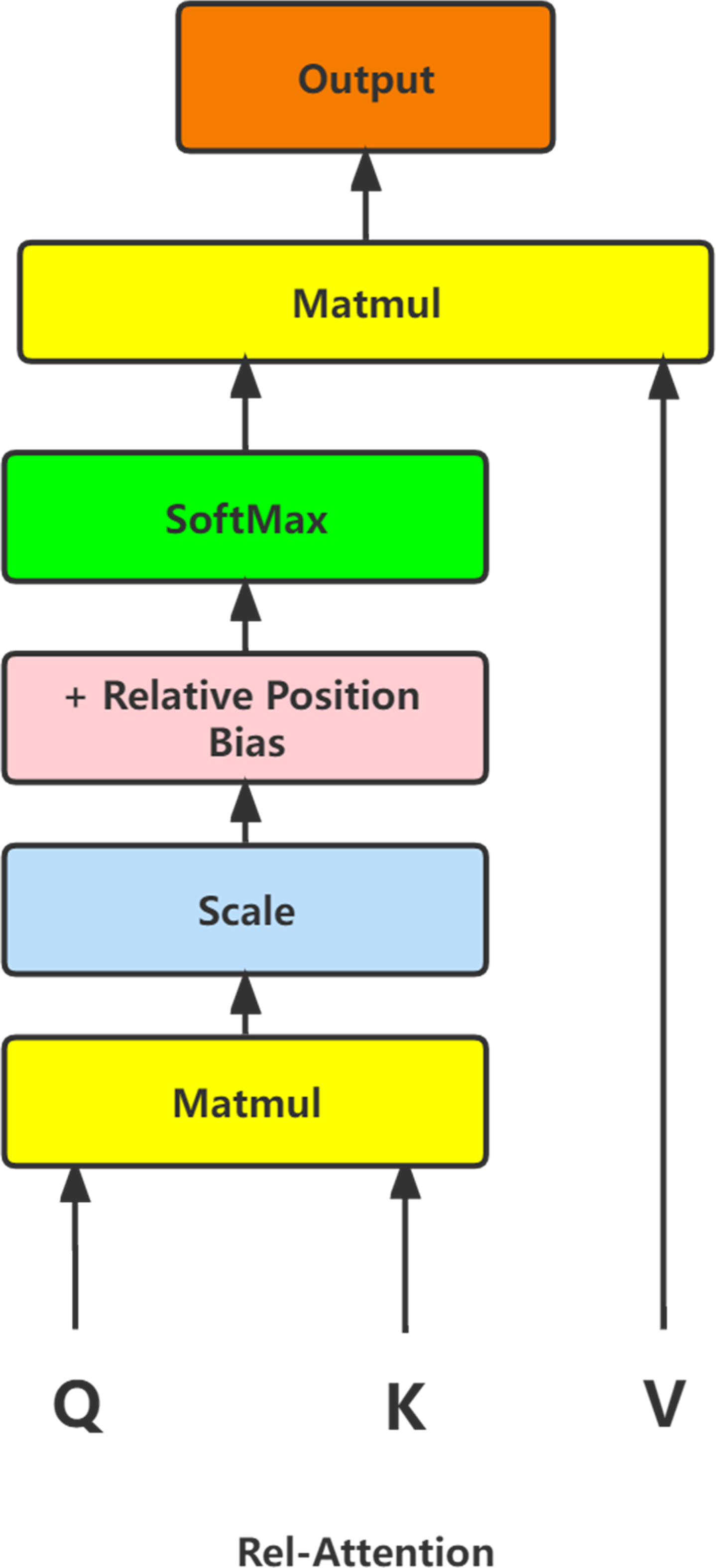

Image classification model based on large kernel attention mechanism and relative position self-attention mechanism [PeerJ]

Analysis of the mixed teaching of college physical education based on the health big data and blockchain technology [PeerJ]

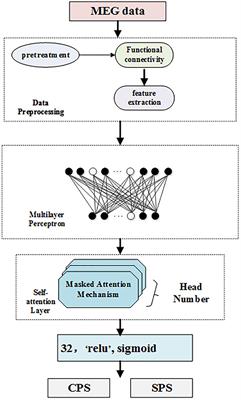

Frontiers | Multi-Head Self-Attention Model for Classification of Temporal Lobe Epilepsy Subtypes

Multi-head enhanced self-attention network for novelty detection - ScienceDirect

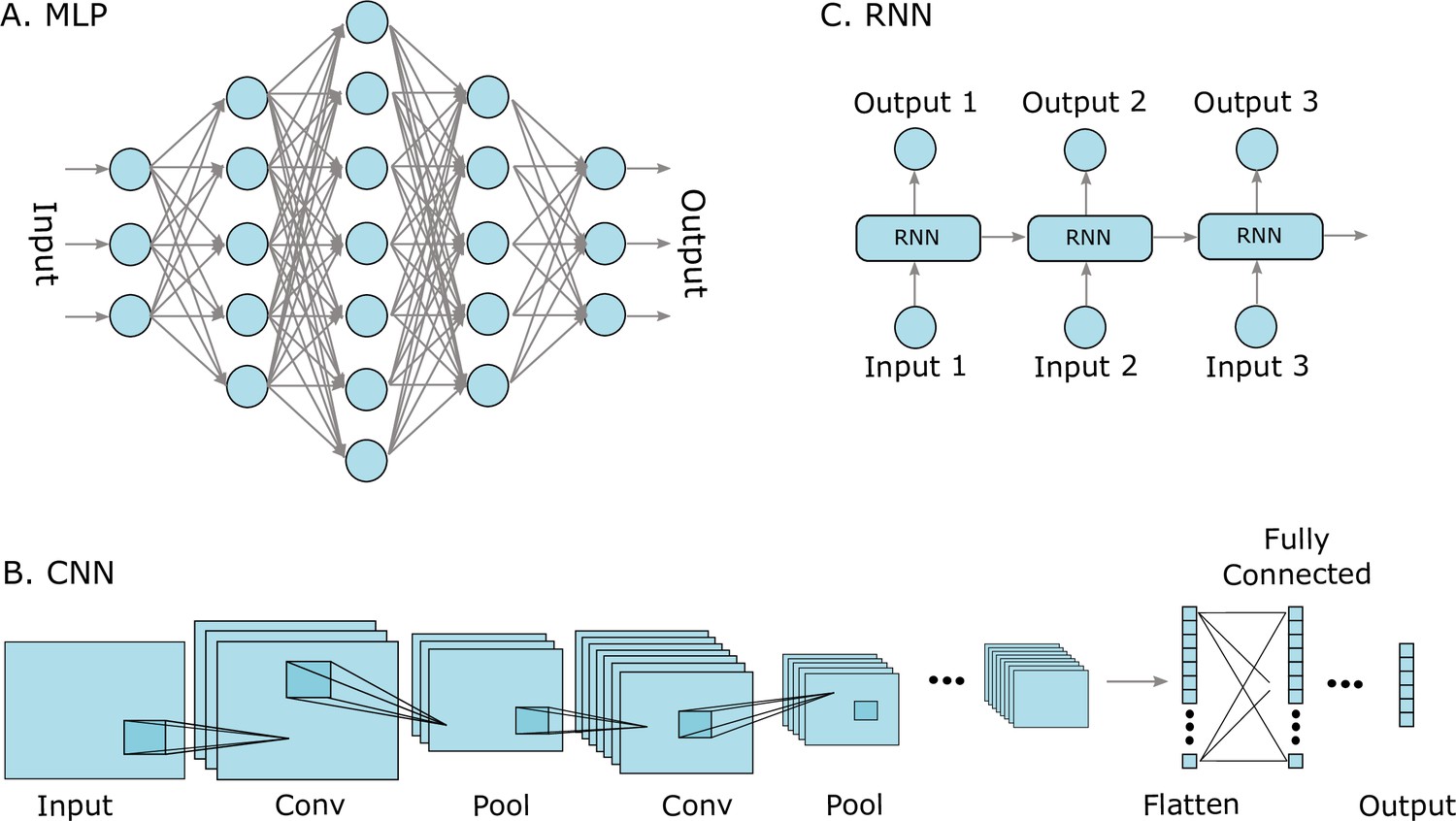

An interpretable ensemble method for deep representation learning - Jiang - Engineering Reports - Wiley Online Library

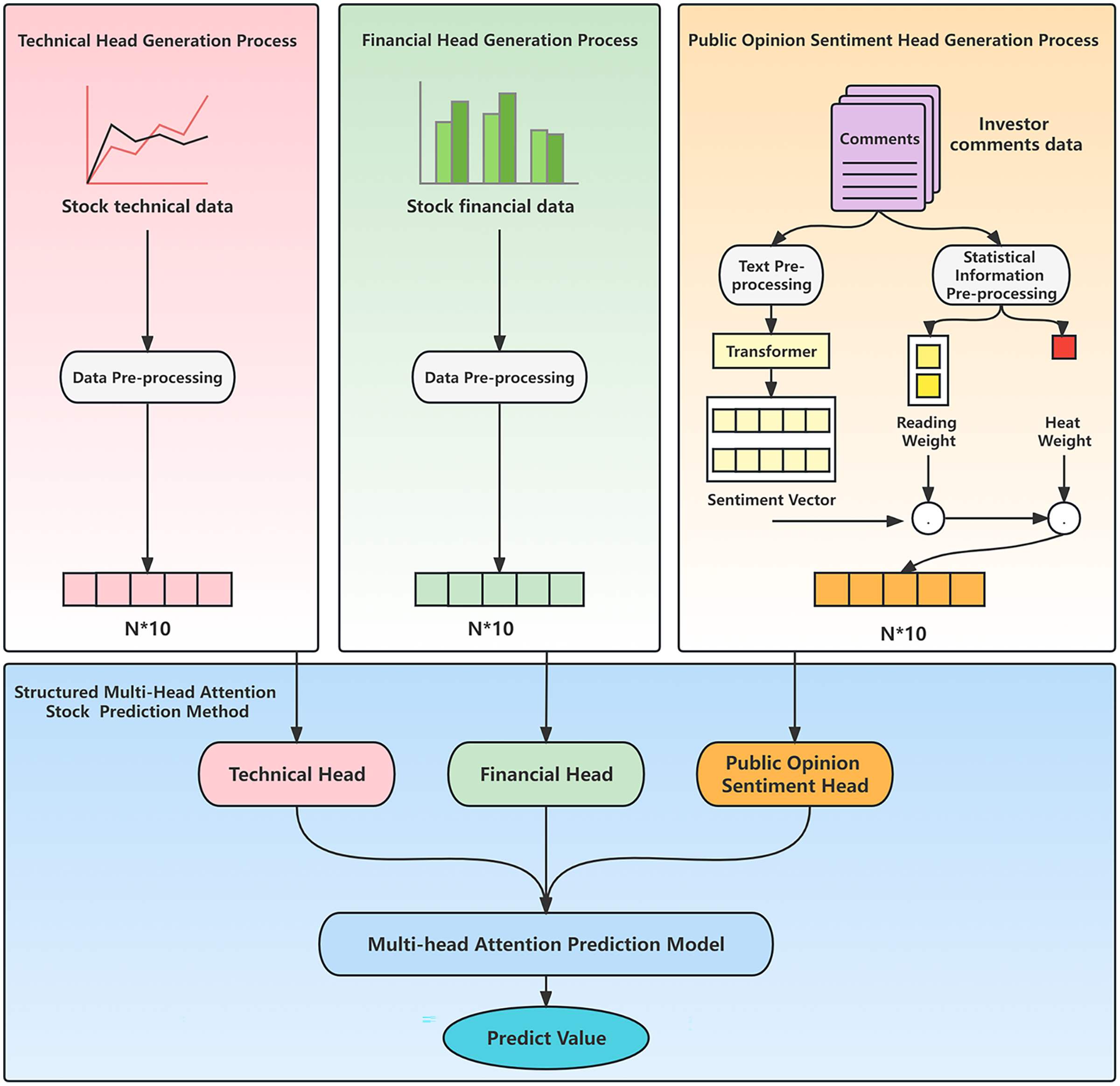

A structured multi-head attention prediction method based on heterogeneous financial data [PeerJ]

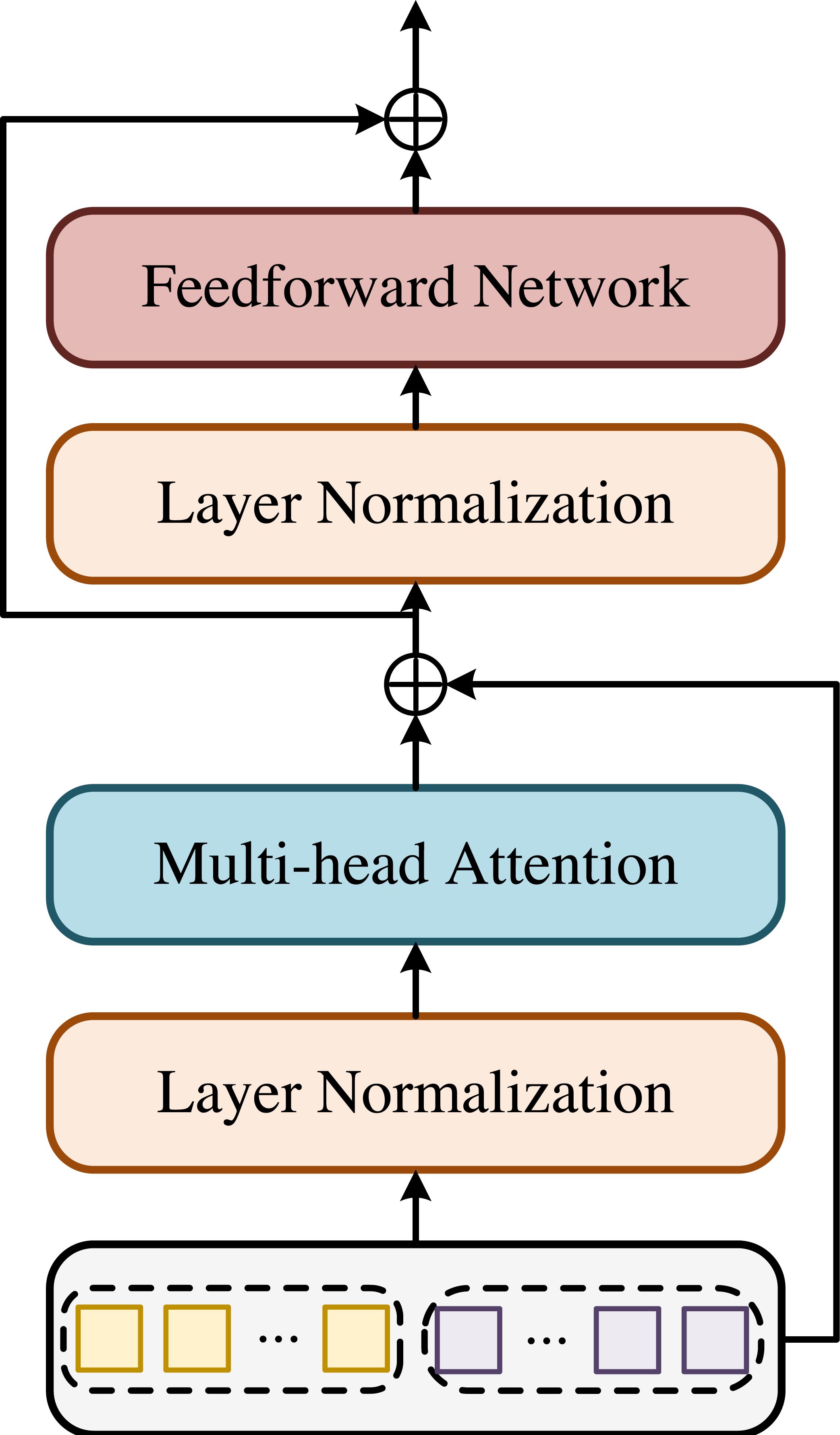

Mockingjay: Unsupervised Speech Representation Learning with Deep Bidirectional Transformer Encoders – arXiv Vanity

Transformer-based deep learning for predicting protein properties in the life sciences

Transformer (machine learning model) - Wikipedia

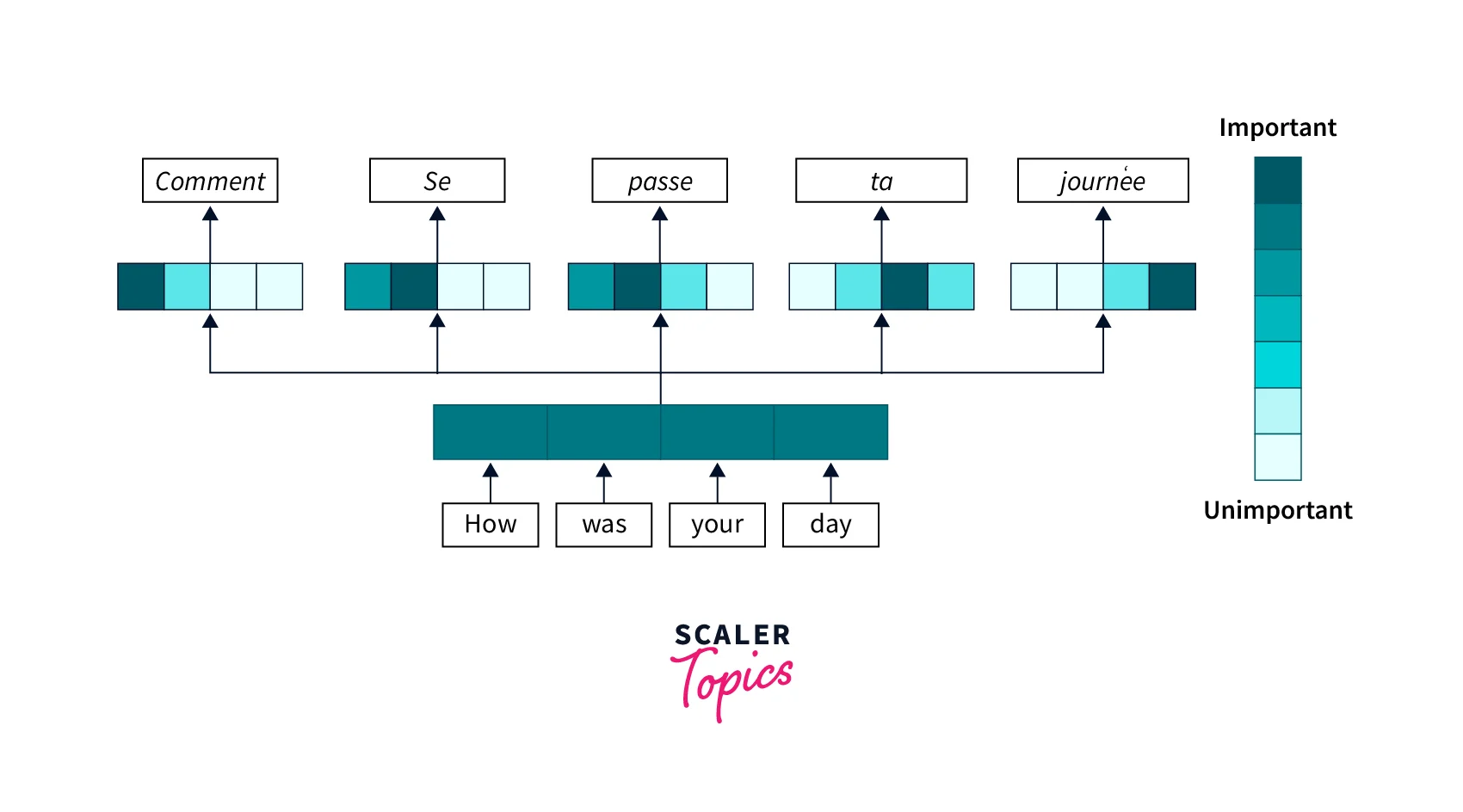

Attention Mechanism in Deep Learning - Scaler Topics
