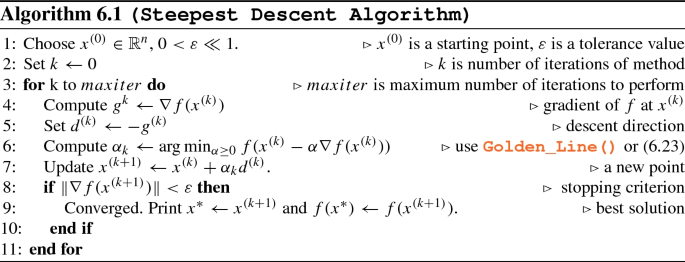

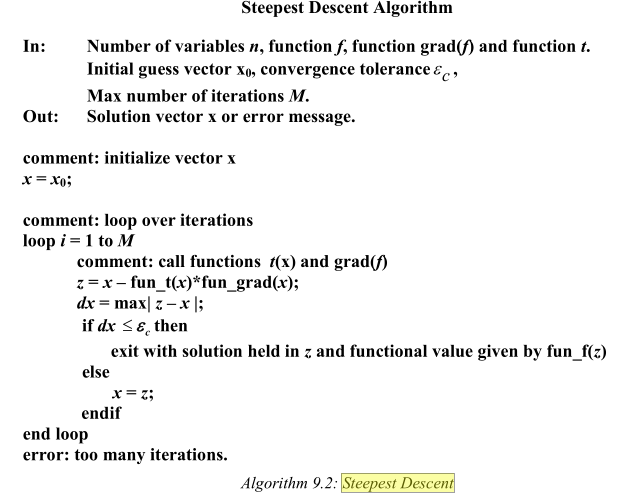

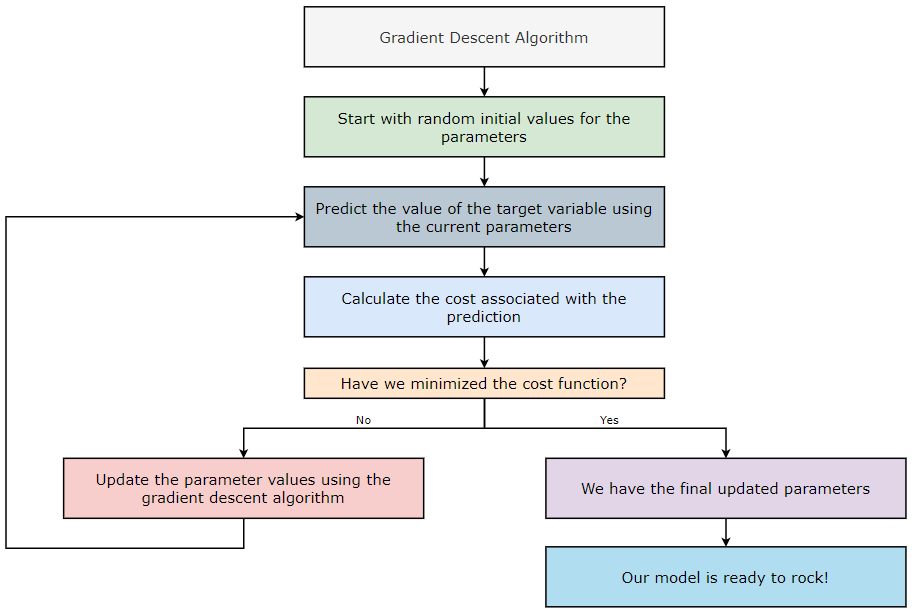

MathType - Gradient descent is an iterative optimization algorithm for finding local minima of multivariate functions. At each step, the algorithm moves in the direction opposite to the gradient, consequently reducing ...
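The update rule described in the snippet above can be illustrated with a minimal Python sketch. It is not taken from any of the linked articles. The function name `gradient_descent`, the example function f(x, y) = (x - 1)^2 + 2(y + 2)^2, the learning rate, and the stopping tolerance are all assumptions chosen for illustration.

```python
from typing import Callable, List, Sequence


def gradient_descent(
    grad: Callable[[Sequence[float]], List[float]],
    start: Sequence[float],
    learning_rate: float = 0.1,   # assumed step size, not from the source
    steps: int = 200,             # assumed iteration budget
    tol: float = 1e-10,           # assumed stopping threshold on the gradient norm
) -> List[float]:
    """Repeatedly step against the gradient until it is (nearly) zero."""
    point = list(start)
    for _ in range(steps):
        g = grad(point)
        # Stop early once the squared gradient norm is below tol squared.
        if sum(gi * gi for gi in g) < tol * tol:
            break
        # Move in the direction opposite to the gradient.
        point = [pi - learning_rate * gi for pi, gi in zip(point, g)]
    return point


def example_grad(p: Sequence[float]) -> List[float]:
    """Gradient of the illustrative function f(x, y) = (x - 1)^2 + 2 * (y + 2)^2."""
    x, y = p
    return [2.0 * (x - 1.0), 4.0 * (y + 2.0)]


minimum = gradient_descent(example_grad, [0.0, 0.0])
print(minimum)  # approaches the minimizer of the example function at (1, -2)
```

For this convex example the iterates approach the single minimum at (1, -2). On a non-convex function the same loop may instead settle in whichever local minimum is reachable from the starting point, and a learning rate that is too large can make the iterates diverge.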


Description

Optimization Techniques used in Classical Machine Learning ft: Gradient Descent, by Manoj Hegde

Implementing Gradient Descent for multilinear regression from scratch, by Gunand Mayanglambam, Analytics Vidhya

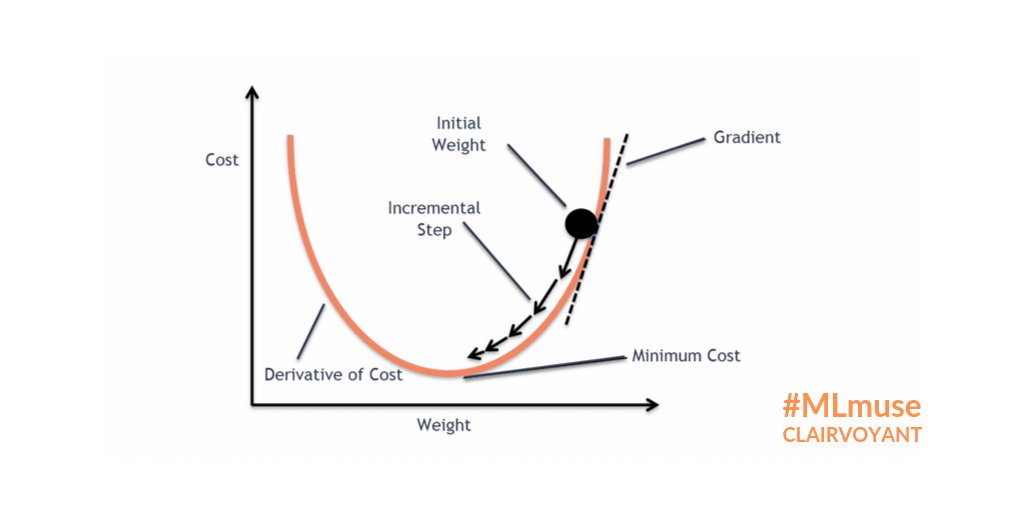

Gradient descent algorithm and its three types

machine learning - Java implementation of multivariate gradient descent - Stack Overflow

[Solved] 4. Gradient descent is a first-order iterative optimisation

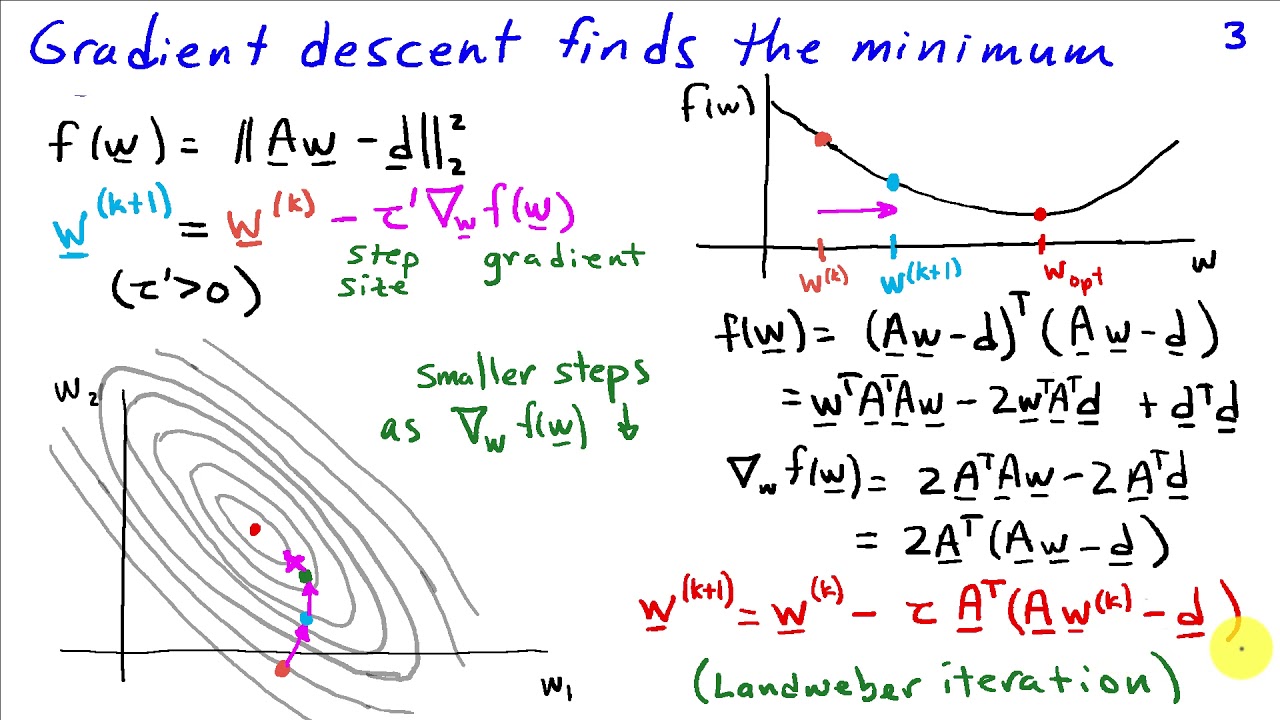

Gradient Descent Solutions to Least Squares Problems


In mathematical optimization, why would someone use gradient descent for a convex function? Why wouldn't they just find the derivative of this function, and look for the minimum in the traditional way?

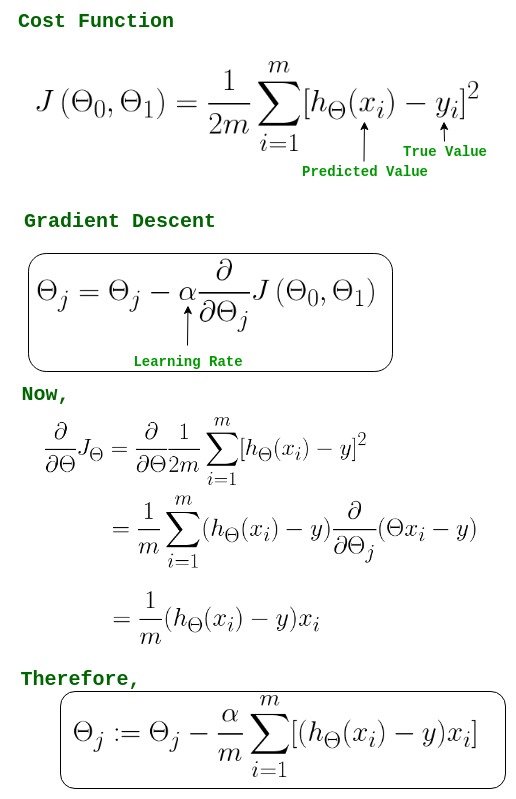

Linear Regression From Scratch PT2: The Gradient Descent Algorithm, by Aminah Mardiyyah Rufai, Nerd For Tech

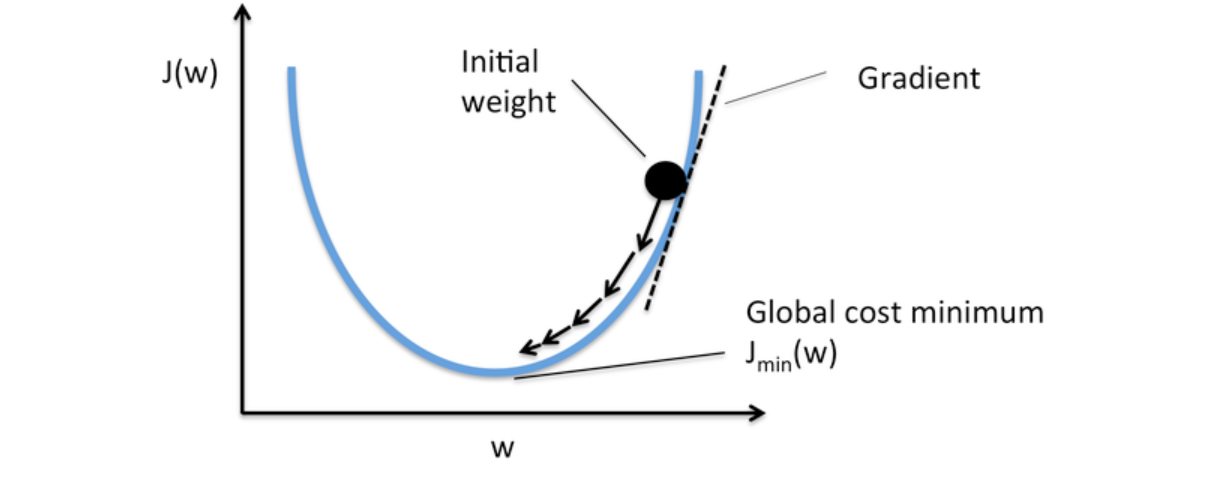

Gradient descent optimization algorithm.


Is there a mathematical proof of why the gradient descent algorithm always converges to the global/ local minimum if the learning rate is small enough? - Quora

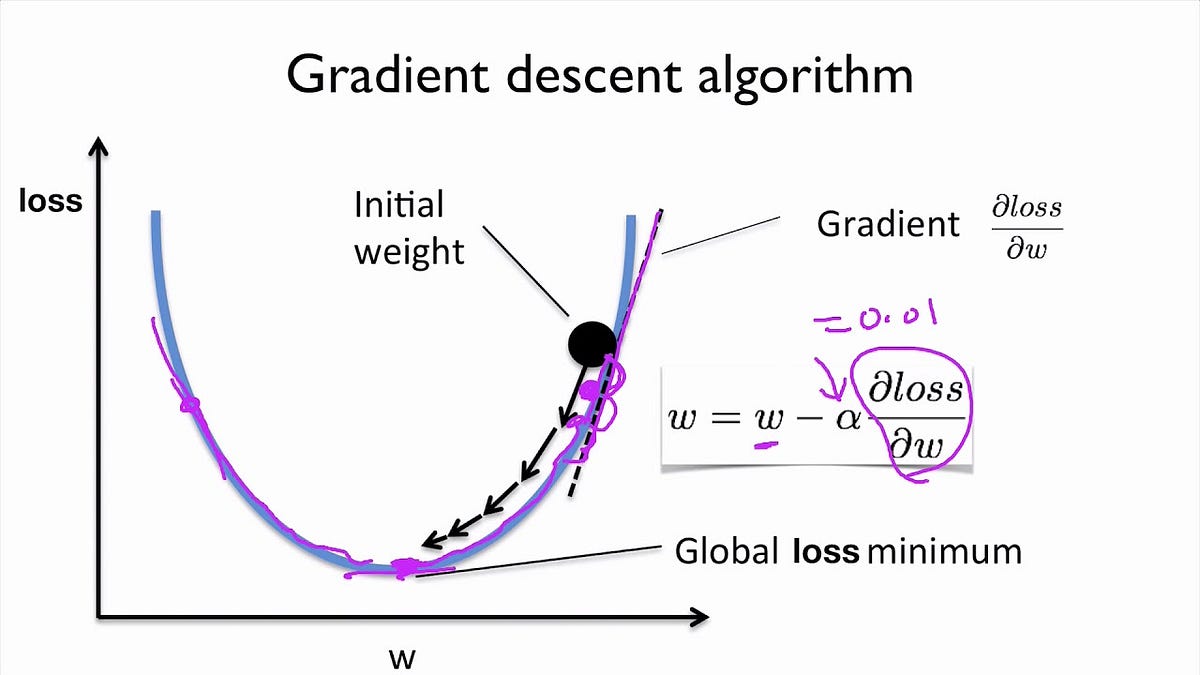

The Gradient Descent Algorithm – Towards AI

Gradient Descent in Linear Regression - GeeksforGeeks
