8 Advanced parallelization - Deep Learning with JAX

By a mysterious writer

Description

Using easy-to-revise parallelism with xmap() · Compiling and automatically partitioning functions with pjit() · Using tensor sharding to achieve parallelization with XLA · Running code in multi-host configurations
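
The topics above center on sharding arrays across devices and letting XLA partition the computation. Below is a minimal sketch of that idea. It assumes a recent JAX release with the `jax.sharding` API. The chapter itself uses `xmap()` and `pjit()`, but in newer JAX versions `jax.jit` accepts sharded inputs directly. The batch axis of `x` is split over a one-dimensional device mesh and the weights `w` are replicated. The names `predict`, `x`, `w`, and the mesh axis `"data"` are illustrative only.

```python
import jax
import jax.numpy as jnp
import numpy as np
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Build a 1-D mesh over all visible devices (a single CPU device also works).
devices = np.array(jax.devices())
n = len(devices)
mesh = Mesh(devices, axis_names=("data",))

# Batch size is a multiple of the device count so each device gets an equal shard.
x = jnp.arange(4 * n * 4, dtype=jnp.float32).reshape(4 * n, 4)
w = jnp.ones((4, 2), dtype=jnp.float32)

# Shard the leading (batch) axis of x over "data"; replicate w on every device.
x = jax.device_put(x, NamedSharding(mesh, P("data", None)))
w = jax.device_put(w, NamedSharding(mesh, P()))

@jax.jit
def predict(x, w):
    # XLA propagates the input shardings and partitions the matmul accordingly.
    return jnp.tanh(x @ w)

y = predict(x, w)
print(y.shape, y.sharding)
```

With a single device the mesh has one entry and the code still runs. With several accelerators on one host, the same code splits the batch across them without any change to `predict`.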

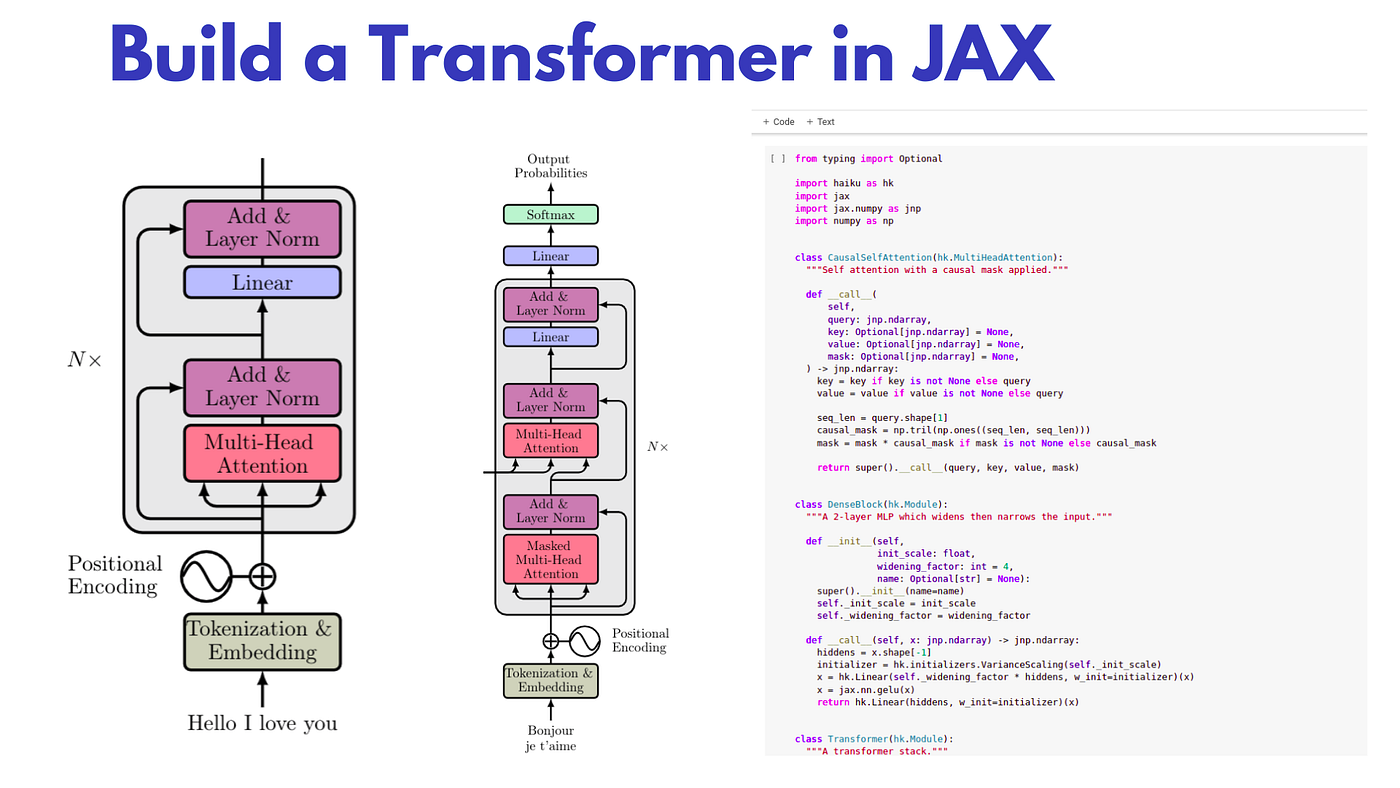

Build a Transformer in JAX from scratch

Efficiently Scale LLM Training Across a Large GPU Cluster with

Intro to JAX for Machine Learning, by Khang Pham

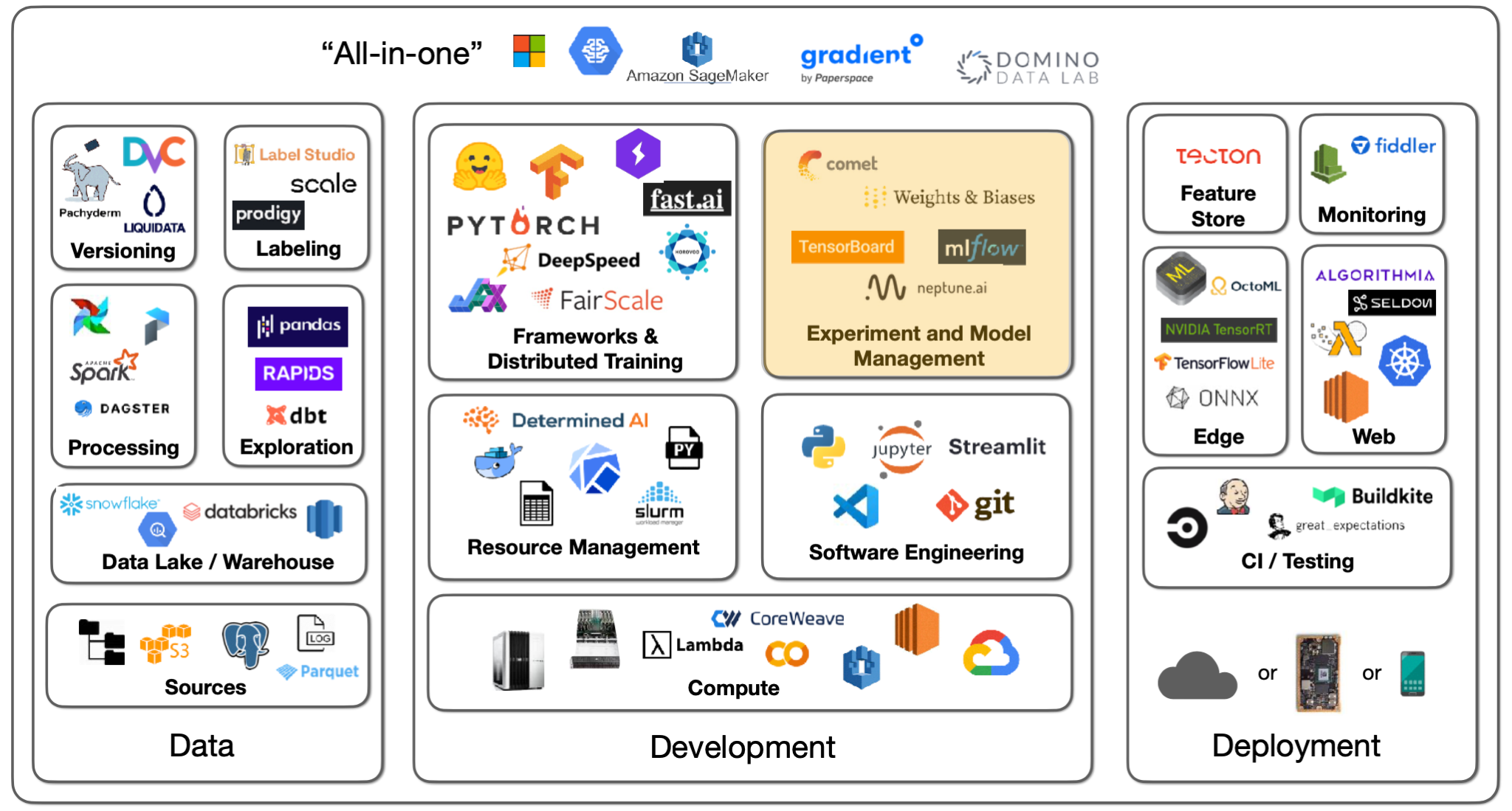

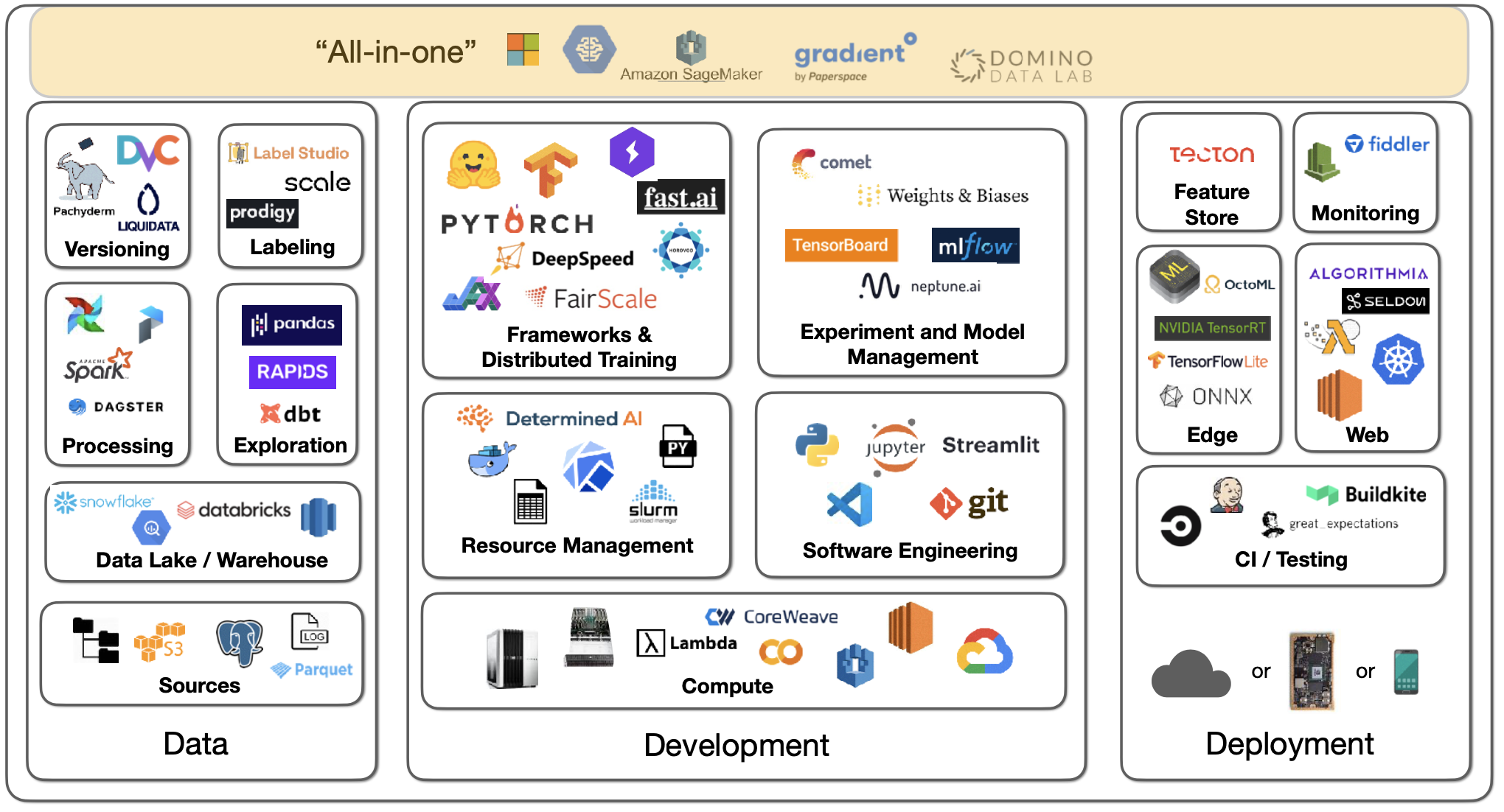

Lecture 2: Development Infrastructure & Tooling - The Full Stack

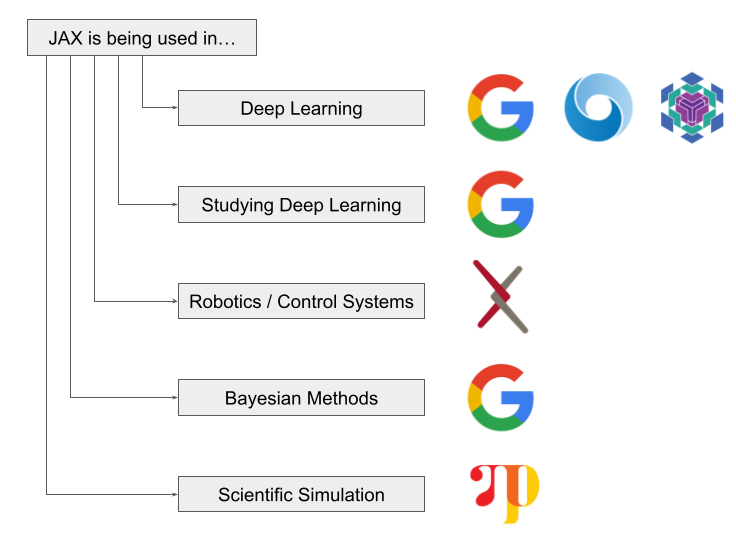

Why You Should (or Shouldn't) be Using Google's JAX in 2023

Using Cloud TPU Multislice to scale AI workloads

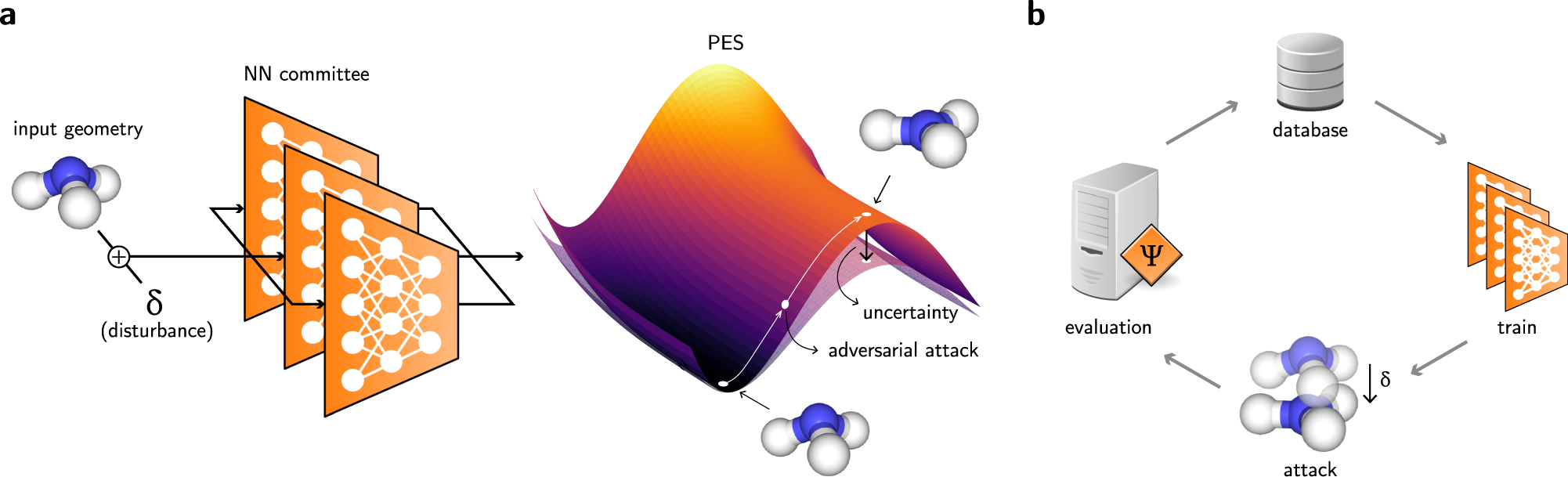

Differentiable sampling of molecular geometries with uncertainty

Why You Should (or Shouldn't) be Using Google's JAX in 2023

Lecture 2: Development Infrastructure & Tooling - The Full Stack

7 Parallelizing your computations - Deep Learning with JAX

What is Google JAX? Everything You Need to Know - Geekflare

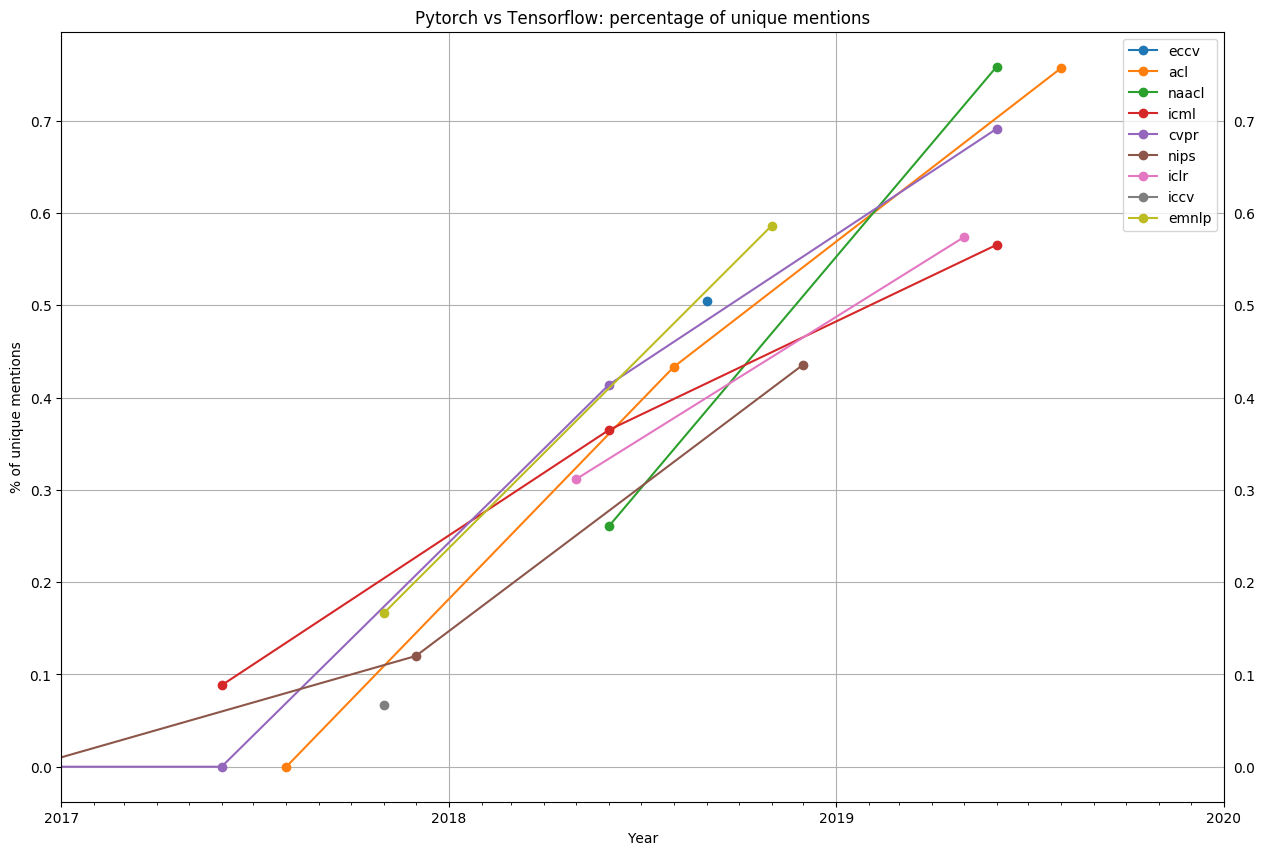

The State of Machine Learning Frameworks in 2019

de

por adulto (o preço varia de acordo com o tamanho do grupo)