H100, L4 and Orin Raise the Bar for Inference in MLPerf

By a mysterious writer

Description

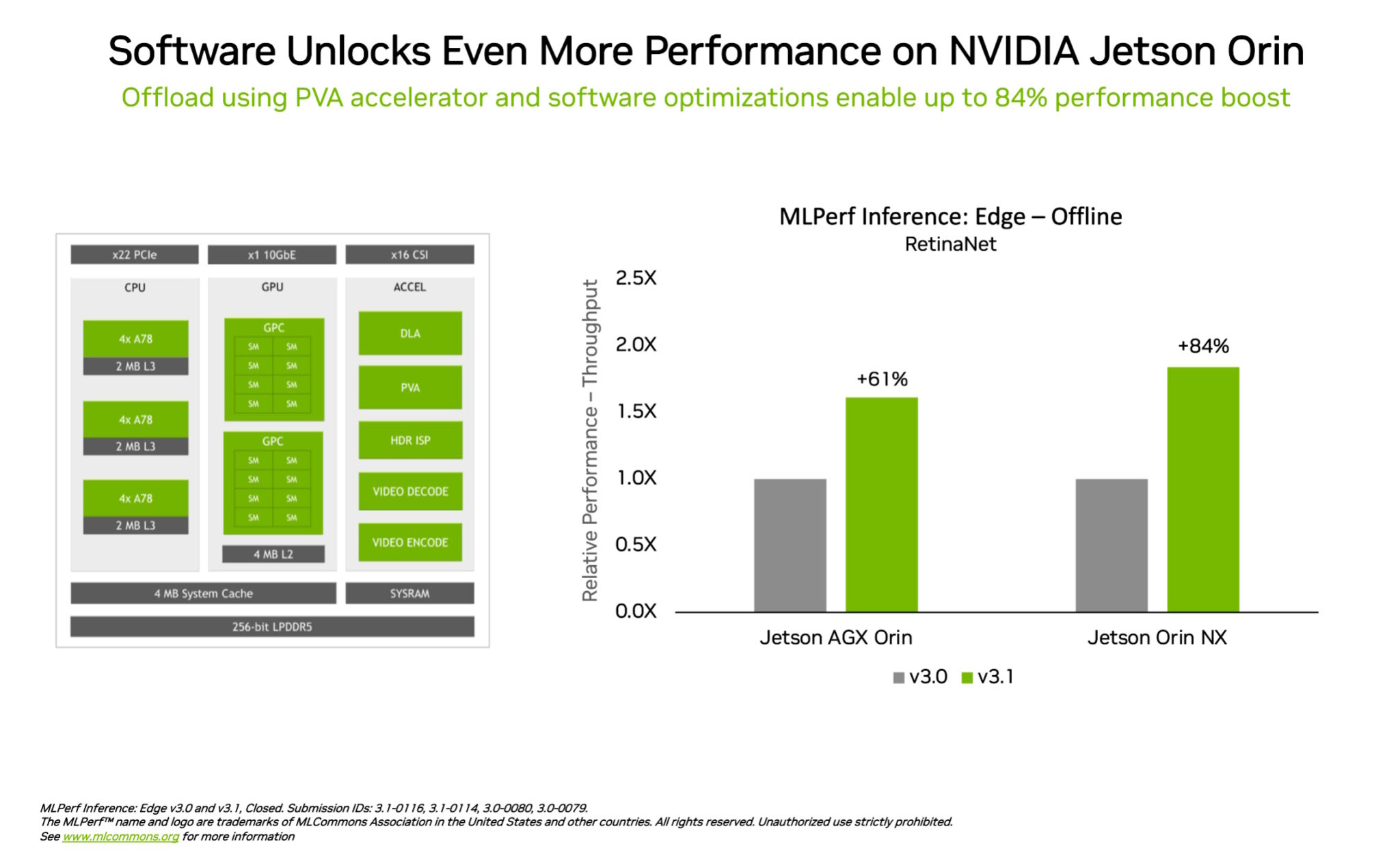

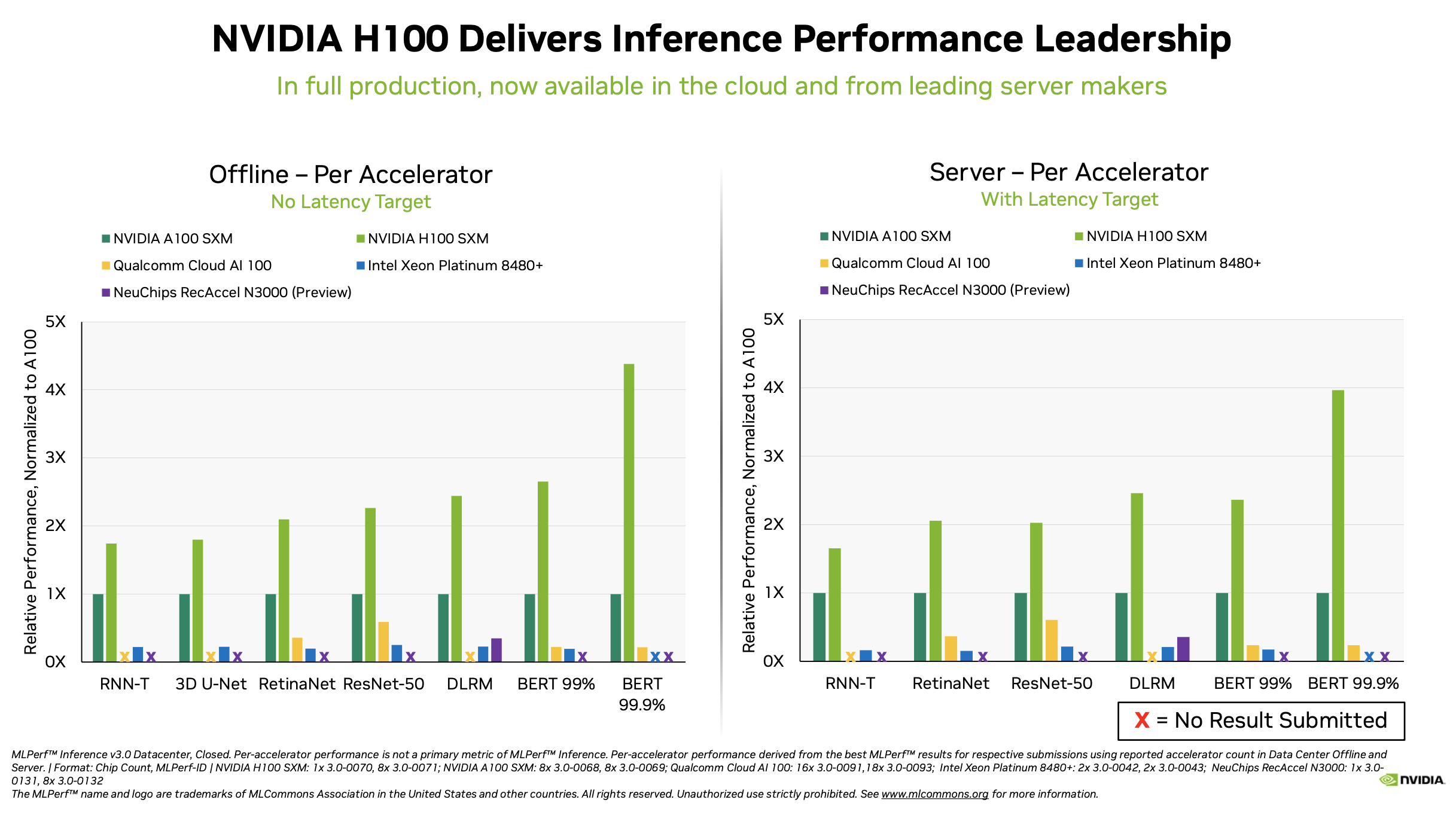

NVIDIA H100 and L4 GPUs took generative AI and all other workloads to new levels in the latest MLPerf benchmarks, while Jetson AGX Orin made performance and efficiency gains.

MLPerf Inference: Startups Beat Nvidia on Power Efficiency

MLPerf Inference 3.0 Highlights - Nvidia, Intel, Qualcomm and…ChatGPT

[D] LLM inference energy efficiency compared (MLPerf Inference Datacenter v3.0 results) : r/MachineLearning

Wei Liu on LinkedIn: NVIDIA Hopper, Ampere GPUs Sweep Benchmarks in AI Training

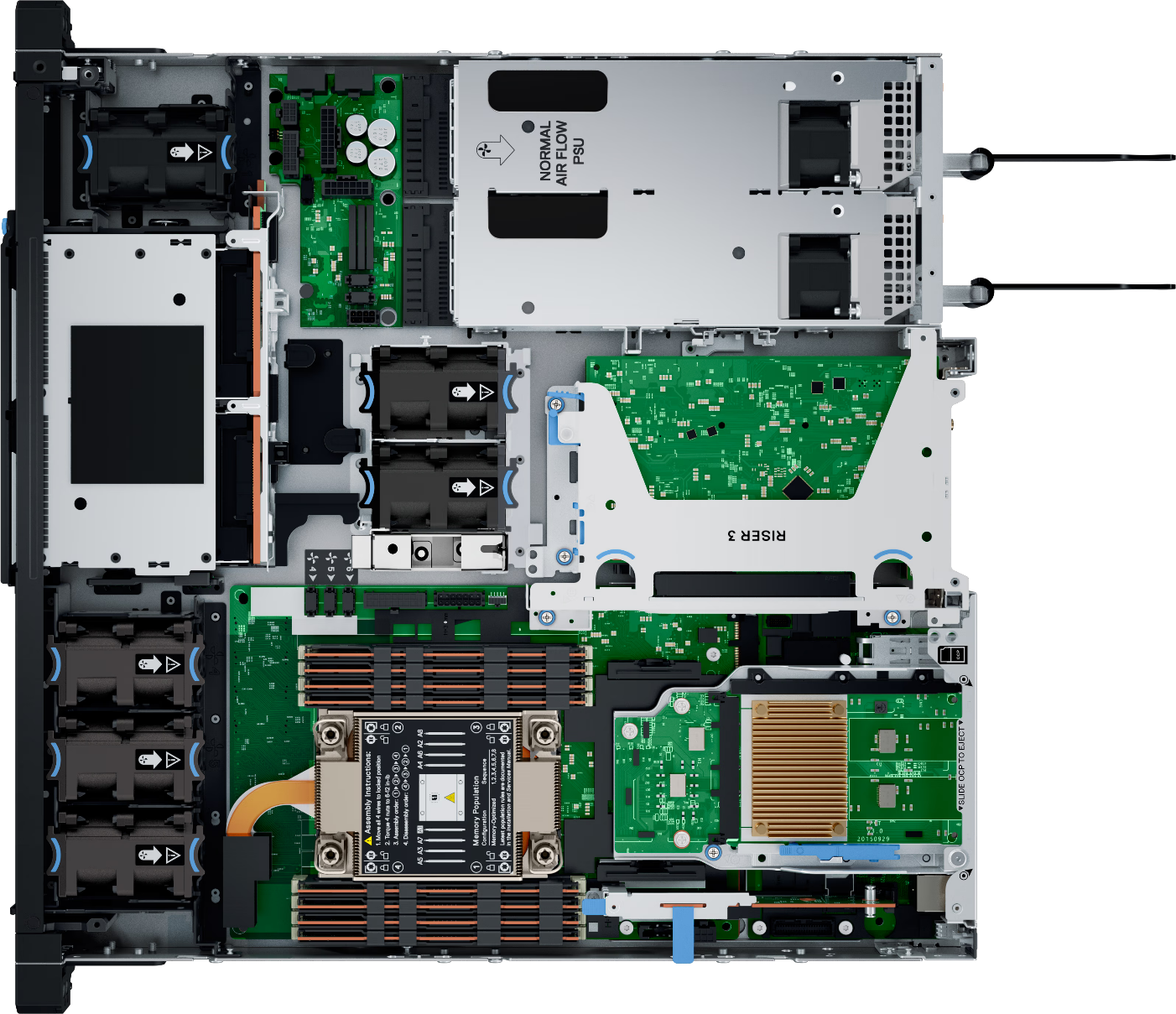

MLPerf™ Inference v3.1 Edge Workloads Powered by Dell PowerEdge Servers

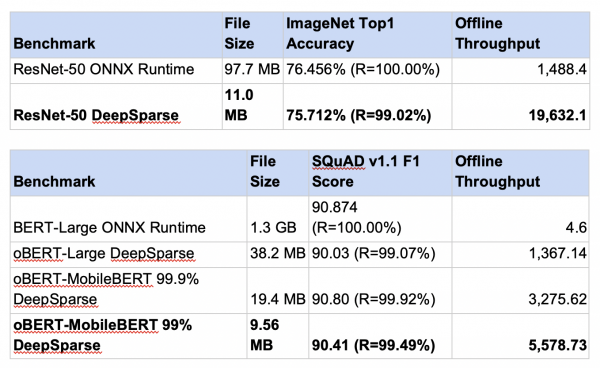

Setting New Records in MLPerf Inference v3.0 with Full-Stack Optimizations for AI

NVIDIA Grace Hopper Superchip Sweeps MLPerf Inference Benchmarks
